(1). Introduction

The previous post covered the HBase Shell. This short post walks through the HBase Java API.

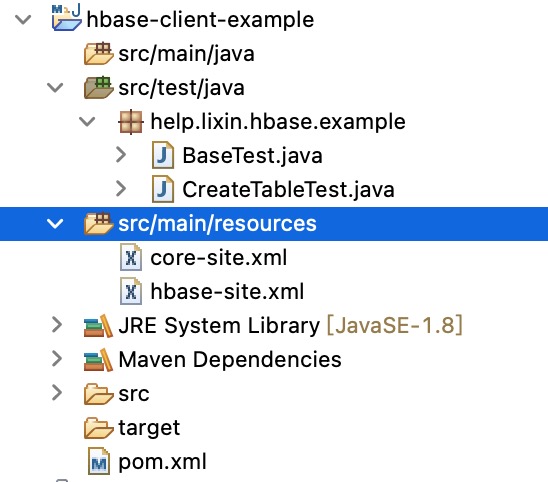

(2). 项目结构如下

(3). Creating a table and its column family

package help.lixin.hbase.example;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.io.compress.Compression.Algorithm;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

public class CreateTableTest {

    private Connection connection = null;

    @Before
    public void init() throws Exception {
        Configuration configuration = HBaseConfiguration.create();
        // Load the cluster settings from the site files on the classpath.
        configuration.addResource(new Path(ClassLoader.getSystemResource("hbase-site.xml").toURI()));
        configuration.addResource(new Path(ClassLoader.getSystemResource("core-site.xml").toURI()));
        connection = ConnectionFactory.createConnection(configuration);
    }

    @After
    public void close() throws Exception {
        // Release the connection after each test.
        if (connection != null) {
            connection.close();
        }
    }

    @Test
    public void testCreateTable() throws Exception {
        // Admin is Closeable, so let try-with-resources release it.
        try (Admin admin = connection.getAdmin()) {
            // Table name
            TableName userTableName = TableName.valueOf("user");
            // Create the table only if it does not exist yet.
            if (!admin.tableExists(userTableName)) {
                // Table descriptor
                HTableDescriptor table = new HTableDescriptor(userTableName);
                // Column family descriptor
                HColumnDescriptor info = new HColumnDescriptor("info");
                // Compression applied when store files are rewritten during compaction.
                // (setCompressionType sets the family's general compression instead.)
                info.setCompactionCompressionType(Algorithm.GZ);
                // Maximum number of historical versions kept per cell
                info.setMaxVersions(5);
                // Add the column family to the table (user).
                table.addFamily(info);
                // Create the table and its column family.
                // Shell equivalent: create 'user','info'
                admin.createTable(table);
            }
        }
    }
}
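To make the effect of `setMaxVersions(5)` more concrete, here is a minimal in-memory sketch in plain Java. It is not HBase code: the class `VersionedCellSketch` and its methods are hypothetical names made up for illustration, and the sketch trims old versions eagerly, whereas HBase discards surplus versions lazily (for example during compaction). It only models the visible behaviour: versions are keyed by timestamp, the newest comes first, and at most five are retained.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.TreeMap;

/** Simplified model of one cell that keeps at most maxVersions versions (hypothetical, not an HBase class). */
class VersionedCellSketch {
    // Newest timestamp first, mirroring the order in which versions are read back.
    private final TreeMap<Long, String> versions =
            new TreeMap<>(Collections.<Long>reverseOrder());
    private final int maxVersions;

    VersionedCellSketch(int maxVersions) {
        this.maxVersions = maxVersions;
    }

    /** Writing an existing timestamp replaces that version; otherwise a new version is added. */
    void put(long timestamp, String value) {
        versions.put(timestamp, value);
        // Drop the oldest versions once the limit is exceeded.
        while (versions.size() > maxVersions) {
            versions.pollLastEntry();
        }
    }

    /** Returns the newest value, or null if nothing has been written. */
    String latest() {
        return versions.isEmpty() ? null : versions.firstEntry().getValue();
    }

    /** Returns all retained values, newest first. */
    List<String> all() {
        return new ArrayList<>(versions.values());
    }

    public static void main(String[] args) {
        VersionedCellSketch name = new VersionedCellSketch(5);
        for (long ts = 1; ts <= 7; ts++) {
            name.put(ts, "v" + ts);
        }
        System.out.println(name.latest()); // v7
        System.out.println(name.all());    // [v7, v6, v5, v4, v3]
    }
}
```

Seven writes with distinct timestamps leave only the five newest versions; writing again with an existing timestamp replaces that version rather than adding a sixth.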

(4). Basic create, read, update, and delete

package help.lixin.hbase.example;

import java.util.Arrays;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.util.Bytes;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

public class BaseTest {

    private Connection connection = null;

    @Before
    public void init() throws Exception {
        Configuration configuration = HBaseConfiguration.create();
        configuration.addResource(new Path(ClassLoader.getSystemResource("hbase-site.xml").toURI()));
        configuration.addResource(new Path(ClassLoader.getSystemResource("core-site.xml").toURI()));
        this.connection = ConnectionFactory.createConnection(configuration);
    }

    @After
    public void close() throws Exception {
        // Release the connection after each test.
        if (connection != null) {
            connection.close();
        }
    }

    @Test
    public void addOrUpdate() throws Exception {
        // Table is Closeable, so let try-with-resources release it.
        try (Table table = connection.getTable(TableName.valueOf("user"))) {
            // Row key
            Put row1 = new Put(Bytes.toBytes("1"));
            // Arguments: column family, qualifier, timestamp (version), value
            row1.addColumn(Bytes.toBytes("info"), Bytes.toBytes("name"), 1L, Bytes.toBytes("张三"));
            row1.addColumn(Bytes.toBytes("info"), Bytes.toBytes("sex"), 1L, Bytes.toBytes("男"));
            row1.addColumn(Bytes.toBytes("info"), Bytes.toBytes("pwd"), 1L, Bytes.toBytes("123"));
            table.put(row1);

            Put row2 = new Put(Bytes.toBytes("2"));
            row2.addColumn(Bytes.toBytes("info"), Bytes.toBytes("name"), 1L, Bytes.toBytes("李四"));
            row2.addColumn(Bytes.toBytes("info"), Bytes.toBytes("sex"), 1L, Bytes.toBytes("男"));
            row2.addColumn(Bytes.toBytes("info"), Bytes.toBytes("pwd"), 1L, Bytes.toBytes("321"));
            table.put(row2);
        }
    }

    /**
     * Expected output:
     * info:name 张三 info:pwd 123 info:sex 男
     * info:name 李四 info:pwd 321 info:sex 男
     *
     * @throws Exception
     */
    @Test
    public void batchGet() throws Exception {
        try (Table table = connection.getTable(TableName.valueOf("user"))) {
            Get getOne = new Get(Bytes.toBytes("1"));
            Get getTwo = new Get(Bytes.toBytes("2"));
            List<Get> gets = Arrays.asList(getOne, getTwo);
            Result[] results = table.get(gets);
            for (Result result : results) {
                // listCells() returns null for a row that does not exist, so skip empty results.
                if (result.isEmpty()) {
                    continue;
                }
                for (Cell cell : result.listCells()) {
                    // Column family
                    String columnFamily = Bytes.toString(CellUtil.cloneFamily(cell));
                    // Column qualifier
                    String columnQualifier = Bytes.toString(CellUtil.cloneQualifier(cell));
                    // Cell value
                    String cellValue = Bytes.toString(CellUtil.cloneValue(cell));
                    System.out.print(String.format("%s %s \t", columnFamily + ":" + columnQualifier, cellValue));
                }
                System.out.println();
            }
        }
    }

    @Test
    public void testDelete() throws Exception {
        try (Table table = connection.getTable(TableName.valueOf("user"))) {
            // Delete the whole row with row key "2".
            Delete delete = new Delete(Bytes.toBytes("2"));
            table.delete(delete);
        }
    }
}
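One interaction between these tests is worth spelling out: the puts use the fixed timestamp `1L`, while a `Delete` created without a timestamp leaves a delete marker at the current time. A marker hides every version whose timestamp is not newer than it, so writing row "2" again with timestamp `1L` after `testDelete` stays invisible until the marker is cleaned up (by a major compaction). The following plain-Java sketch models only that rule; `DeleteMarkerSketch` and its methods are hypothetical names for illustration, not part of the HBase API.

```java
import java.util.Map;
import java.util.TreeMap;

/** Simplified model of one cell with timestamped puts and a delete marker (hypothetical, not an HBase class). */
class DeleteMarkerSketch {
    // Versions keyed by timestamp, oldest first.
    private final TreeMap<Long, String> versions = new TreeMap<>();
    // Timestamp of the newest delete marker; -1 means the cell was never deleted.
    private long deleteMarker = -1L;

    void put(long timestamp, String value) {
        versions.put(timestamp, value);
    }

    /** Models a delete issued at time 'now': hides all versions with timestamp <= now. */
    void delete(long now) {
        deleteMarker = Math.max(deleteMarker, now);
    }

    /** Returns the newest version that is not hidden by the marker, or null. */
    String get() {
        Map.Entry<Long, String> newest = versions.lastEntry();
        if (newest == null || newest.getKey() <= deleteMarker) {
            return null;
        }
        return newest.getValue();
    }

    public static void main(String[] args) {
        DeleteMarkerSketch row2 = new DeleteMarkerSketch();
        row2.put(1L, "lisi");
        row2.delete(1_700_000_000_000L);      // marker at the "current time"
        row2.put(1L, "lisi");                 // written again with the old timestamp
        System.out.println(row2.get());       // null: still hidden by the marker
        row2.put(1_700_000_000_001L, "lisi"); // timestamp newer than the marker
        System.out.println(row2.get());       // lisi
    }
}
```

In practice this suggests either omitting the explicit timestamp in `addColumn` so the server assigns the current time, or choosing timestamps that keep increasing.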

(5). core-site.xml (copied from Hadoop)

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <!-- Default file system of the cluster: HDFS (fs.defaultFS replaces the deprecated fs.default.name) -->
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
    </property>
    <!-- Directory for temporary files -->
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/Users/lixin/Developer/hadoop/hadoopDatas/tmpDatas</value>
    </property>
    <!-- I/O buffer size in bytes; tune it to the server's capacity in practice -->
    <property>
        <name>io.file.buffer.size</name>
        <value>4096</value>
    </property>
    <!-- Enable the HDFS trash so deleted data can be recovered; the interval is in minutes (10080 = 7 days) -->
    <property>
        <name>fs.trash.interval</name>
        <value>10080</value>
    </property>
</configuration>

(6). hbase-site.xml (copied from HBase)

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <!-- Run in distributed (cluster) mode -->
    <property>
        <name>hbase.cluster.distributed</name>
        <value>true</value>
    </property>
    <!-- HBase root directory on HDFS -->
    <property>
        <name>hbase.rootdir</name>
        <value>hdfs://lixin-macbook.local:9000/hbase</value>
    </property>
</configuration>
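Both site files share the same layout: a list of `<property>` elements, each holding a `<name>` and a `<value>`. When several resources are added to a Hadoop `Configuration`, a value from a later resource overrides the same name from an earlier one (unless the earlier property is marked final). The plain-Java sketch below imitates only that reading and overriding step with the JDK's DOM parser; `SiteXmlSketch`, its `addResource` method, and the override XML are hypothetical and are not how Hadoop implements it.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Reads Hadoop-style site XML into a map (hypothetical helper, not part of Hadoop or HBase). */
class SiteXmlSketch {

    /** Copies every property name/value pair from xml into target; later calls overwrite earlier values. */
    static void addResource(Map<String, String> target, String xml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        NodeList properties = doc.getElementsByTagName("property");
        for (int i = 0; i < properties.getLength(); i++) {
            Element property = (Element) properties.item(i);
            String name = property.getElementsByTagName("name").item(0).getTextContent().trim();
            String value = property.getElementsByTagName("value").item(0).getTextContent().trim();
            target.put(name, value);
        }
    }

    public static void main(String[] args) throws Exception {
        // Same properties as the hbase-site.xml above.
        String hbaseSite = "<configuration>"
                + "<property><name>hbase.cluster.distributed</name><value>true</value></property>"
                + "<property><name>hbase.rootdir</name>"
                + "<value>hdfs://lixin-macbook.local:9000/hbase</value></property>"
                + "</configuration>";
        // A made-up second resource that overrides one property.
        String override = "<configuration>"
                + "<property><name>hbase.cluster.distributed</name><value>false</value></property>"
                + "</configuration>";

        Map<String, String> conf = new LinkedHashMap<>();
        addResource(conf, hbaseSite);
        addResource(conf, override);
        System.out.println(conf.get("hbase.cluster.distributed")); // false
        System.out.println(conf.get("hbase.rootdir"));             // hdfs://lixin-macbook.local:9000/hbase
    }
}
```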

(7). pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>help.lixin.hbase.example</groupId>
    <artifactId>hbase-client-example</artifactId>
    <version>1.0.0-SNAPSHOT</version>
    <packaging>jar</packaging>

    <name>hbase-client-example</name>
    <url>http://maven.apache.org</url>

    <properties>
        <maven.compiler.source>8</maven.compiler.source>
        <maven.compiler.target>8</maven.compiler.target>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-client</artifactId>
            <version>1.4.13</version>
        </dependency>
    </dependencies>
</project>

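(8). Summary

This post used the HBase client to create a table and perform basic create, read, update, and delete operations. The API is fairly straightforward.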